A cache is worth a thousand searches

Hybrid meetup #38 took place on 2023-11-20 at 19:00 at the CHECK24 Leipzig office.

We had a great presentation from Fabian about building a mission-critical query caching database (MemoryDB) in Go, and about the challenges involved.

One challenge is the variety of query parameters with nearly overlapping values and ranges. A key to performance is expressing the various parameter values (e.g. date boundaries) as bitmaps, such as Roaring Bitmaps.

Bitmap indexes are commonly used in databases and search engines. By exploiting bit-level parallelism, they can significantly accelerate queries. – RoaringBitmap.pdf

Among other things, the project also uses tableflip, a library that allows you to "update the running code and/or configuration of a network service, without disrupting existing connections."

A very practical concern has been struct design for GC-friendliness. Pop quiz:

- Is the following struct GC-friendly?

- When would it become a problem?

- What could be improved?

```go
// Offer, abridged.
type Offer struct {
	HomeAirport        *Airport
	DestinationAirport *Airport
	Hotel              *Hotel
	RoomType           string
	MealType           string
	Airline            string
	DepartureTime      time.Time
	ReturnTime         time.Time
}
```

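If possible, one can try to use plain values that contain no pointers (strings and time.Time carry pointers internally, too) and that the compiler is free to keep on the stack (note: the Go specification never mentions the stack or the heap, as these concepts are abstracted away by the language):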

```go
// Offer, reduced, abridged.
type Offer struct {
	HomeAirportID        int
	DestinationAirportID int
	HotelID              int
	RoomType             RoomTypeEnum
	MealType             MealTypeEnum
	AirlineID            int
	DepartureTime        int64
	ReturnTime           int64
}
```

This is now a much more compact, GC-friendly struct: it holds no pointers, so the garbage collector does not have to scan it. It requires additional lookups to resolve the respective identifiers, but when dealing with millions of objects it reduces GC load significantly. Simple, effective.

Testdriving OLLAMA

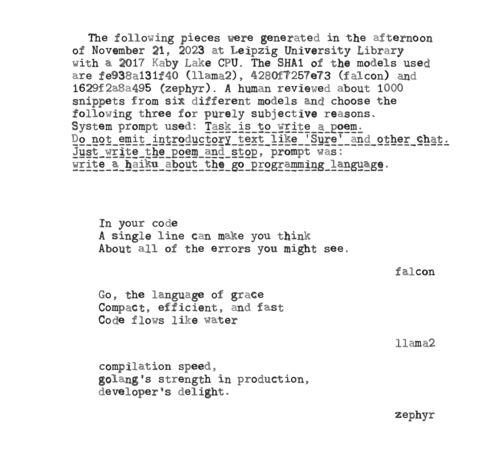

A lightning talk, "Testdriving OLLAMA", introduced Ollama, a packaging tool for large language model files. Ollama is inspired by Docker and lets you wrap LLM customizations (parameters, context) into an easy-to-distribute format.

Thanks to projects like LLaMA and llama.cpp, it is possible to experiment with LLMs on everyday hardware, e.g. a 2017 CPU with a 15 W TDP.

Thanks!

Thanks to CHECK24 for hosting the Leipzig Gophers November 2023 Meetup, to Fabian for the great talk and to Florian for the excellent event organisation.

Are you using an interesting data structure like bitmaps to improve performance? Then join our meetup and tell us about it!